OODA in the age of weak (and strong) LLMs (DRAFT)

How can LLMs improve decision-making? And how will our use of them as decision augmenters change over time, as we go from the current GPT-4 class of models (weak models) to the forthcoming model regime (strong models)? To answer this, we will take Boyd's OODA framework and theorize how each of its pillars benefits from both weak and strong LLMs. The ultimate goal of this exploration is to discover tactics that can help decision makers today.

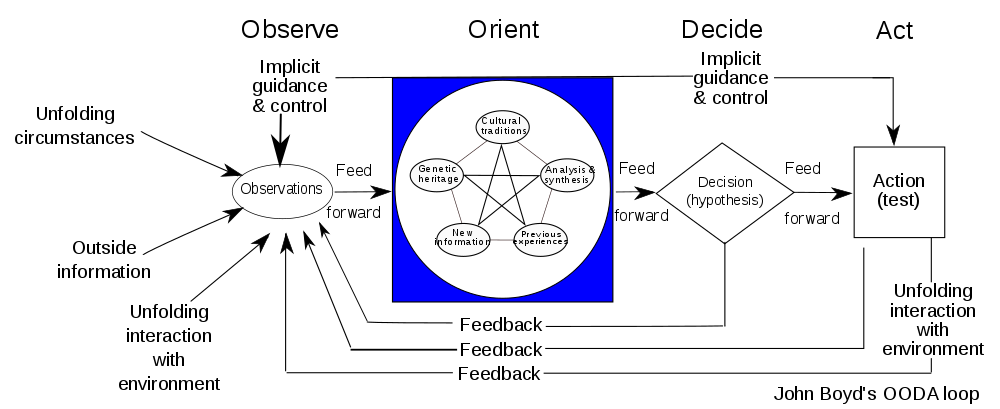

First, a short blurb on OODA: Boyd posits that in an adversarial context, if you can take in and process information from relevant channels (OBSERVE + ORIENT), decide what to do with that processed information (DECIDE), and then act on those decisions (ACT) faster than your adversary, you should be able to 'win'. A tight OODA loop can confer an advantage in, say, a jet dogfight, or at a small startup trying to find product-market fit (PMF). And what Boyd is saying should be intuitive: if you can take well-calibrated actions faster than your opponent, you have a shot at winning whatever game you're playing.

But having the tightest feedback loop is neither a necessary nor a sufficient condition for winning a strategic game: opponents may have an overwhelming material advantage that no amount of nimbleness can overcome, or the game may already be decided (in chess parlance, your opponent may already have mate in 4). OODA is nonetheless an interesting framework for thinking about how decision makers make and act on their decisions, and I think the ideas presented below apply to a range of strategic scenarios.

Ultimately, we make decisions based on information, and information comes either from 1. known channels or 2. unknown channels. The best information for a decision is spread across both. LLMs can help us improve the fidelity of information from known channels, discover unknown channels, and create new channels.

Known Channels

If LLMs will help us process information (a claim established in the ORIENT section below), then there are now strong incentives to collect *more* information from the channels we already use. The friend.com pendant, Hu.ma.ne, Limitless (FKA Rewind.ai) and Microsoft's new [TODO] initiative promise to record everything that happens around us or on our laptop screens, in the hope that current or future systems will be able to mine insights from this high-fidelity data. TODO: While these systems focus on general-purpose use, expect to see more domain-specific "full-recording" software on the market soon. OpenAI's entry into the space with a desktop app hints at the possibility that

LLMs reduce the friction of creating information-gathering scripts. Bots that monitor a Twitter topic, a Google Scholar keyword, or a set of news publications existed well before LLMs, but current LLMs make such scripts much easier to write, and LLMs from the strong regime will make this style of monitoring ~zero friction.
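To show how small the core of such a script is, here is a minimal sketch. It assumes the fetching step (Twitter, Google Scholar, a news feed) has already produced a list of items; `Item` and `new_matches` are hypothetical names for illustration, not any product's API. It reports only items it has not seen on an earlier run that mention a watched keyword.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """A hypothetical feed item: a tweet, a paper abstract, a news headline."""
    id: str
    text: str


def new_matches(items, keywords, seen_ids):
    """Return items not seen on earlier runs whose text mentions a keyword.

    `seen_ids` is a set updated in place, so each item is reported at most once.
    Matching is case-insensitive and whole-word, which assumes keywords begin
    and end with letters or digits.
    """
    if not keywords:
        raise ValueError("need at least one keyword")
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(k) for k in keywords) + r")\b",
        re.IGNORECASE,
    )
    hits = []
    for item in items:
        if item.id in seen_ids:
            continue
        seen_ids.add(item.id)
        if pattern.search(item.text):
            hits.append(item)
    return hits


if __name__ == "__main__":
    seen = set()
    batch = [Item("1", "New paper on speculative decoding"), Item("2", "Nice weather today")]
    print([i.id for i in new_matches(batch, ["decoding"], seen)])  # ['1']
    print([i.id for i in new_matches(batch, ["decoding"], seen)])  # [] (already seen)
```

In a real bot this would run on a schedule, persist `seen_ids` between runs, and hand the hits to an LLM for summarization; those parts are left out here.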

And in fact, there is a budding market for tools that wrap domain-specific, LLM-driven data collection. For example, Campana sells a product that monitors changes to competitors' websites and docs, sending you a weekly summary of product launches, changes in language, pricing, and pivots. Expect this vertical to grow to cover aggregators like Facebook, Twitter, and Reddit, as well as channels like Google Scholar, arXiv, and market reports. As we move into the strong regime, expect everyone to have a topic- or domain-specific research assistant on steroids in their pocket. In the interim there is a temporary opportunity: this type of tooling will not be uniformly distributed, and players with access to the best of these tools have strong incentives to keep them close.
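We don't know how Campana works internally, but one plausible first step for any competitor-change monitor is diffing successive snapshots of a page. A minimal sketch, assuming we already have two plain-text snapshots (fetching and HTML-to-text conversion are left out), uses Python's standard `difflib` to extract added and removed lines. Those lines, rather than whole pages, are what one might hand to an LLM to label as a launch, a pricing change, or a pivot; `page_changes` is a hypothetical helper name.

```python
import difflib


def page_changes(old_text, new_text):
    """Return (added, removed) lines between two snapshots of a page's text."""
    added, removed = [], []
    for line in difflib.ndiff(old_text.splitlines(), new_text.splitlines()):
        # ndiff prefixes lines with "+ ", "- ", "  " (unchanged) or "? " (hints).
        if line.startswith("+ "):
            added.append(line[2:])
        elif line.startswith("- "):
            removed.append(line[2:])
    return added, removed


if __name__ == "__main__":
    old = "Pro plan: $20/month\nTeam plan: $50/month"
    new = "Pro plan: $25/month\nTeam plan: $50/month\nNew: SSO on all plans"
    added, removed = page_changes(old, new)
    print(added)    # ['Pro plan: $25/month', 'New: SSO on all plans']
    print(removed)  # ['Pro plan: $20/month']
```

Diffing first keeps the LLM's input small and focused on what actually changed since the last snapshot.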

Finally, the push towards LLMs drove advances in speech-to-text, such that by '24 tools like Whisper have essentially solved the problem (although some nagging sub-problems around timestamps and diarization, i.e. figuring out who is speaking, remain). All recorded audio can now be indexed. Expect call-recording software like Zoom and Fireflies.ai to improve to near-perfection in the next year.

The most interesting entry into this space is Granola, which enriches your hand-written or hand-typed notes with information from the transcript. Centaur chess is chess played by humans who are allowed a computer assistant. Granola is a kind of centaur version of the auto-notetaking app: it marries human judgement about which notes are *most important* to take with the perfect recall of the Whisper-powered transcriber. Expect to see this "centaur" pattern across more product verticals in the coming months.

Index everything: extend.app takes a business's unstructured data and makes it structured. Expect the current document-storage titans like Google Drive and Microsoft OneDrive to enter this space.

Unknown Channels / New Channels

While weak LLMs can be a helpful thought partner in identifying channels that might contain relevant information ("Hey Claude, [context] is my current information diet for my [task/role], can you help me think of additional streams?"), strong LLMs powering research agents will bear the most fruit here. An autonomous agent can monitor existing data streams,

Even weak LLMs have made it easier than ever to create new information streams.

User research, scaled: LLMs reduce the friction of running ads, scheduling meetings with 100 people, and finding the right people to talk to (a "supercharged Apollo"). Examples include bland.ai, for calls that collect feedback, and outset.ai.

Information is gleaned from channels. LLMs help:

0. With immediate response from the environment
   - Whisper on calls
   - New entry points (smart reminders, even emails, to write notes after a known meeting)
   - Rewind-style recordings
1. Within known channels
   - Reduce the friction of writing a script that monitors Twitter
   - Reduce the friction of scraping
2. Within unknown channels
   - An agent that can actively seek out new channels
3. In the creation of new channels
   - Reduce the friction of sending out a survey to hundreds of people, or of running ads

In machine learning, we often trade off `recall` and `precision`. A quick primer: when you do a Google search, you hope that (A) all of the most relevant websites are retrieved in the first 10 results, and (B) *only* relevant websites are returned. If all of the relevant information is present in the results, we have high recall, and if *only* relevant results are returned, we have high precision. In the OODA context, we want our OBSERVE step to be high-recall, and we need our ORIENT step to prune all that information down to only what is relevant to a decision.
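The two definitions reduce to a couple of set operations. The sketch below uses made-up page names purely for illustration: a search that returns 10 results, 4 of them relevant, when 8 relevant pages exist overall, has precision 4/10 = 0.4 and recall 4/8 = 0.5. Collecting everything instead, as a high-recall OBSERVE step would, pushes recall to 1.0 while precision collapses.

```python
def precision_recall(retrieved, relevant):
    """Precision and recall of a set of retrieved results.

    precision = |retrieved & relevant| / |retrieved|  (how much of what we got is useful)
    recall    = |retrieved & relevant| / |relevant|   (how much of what is useful we got)
    """
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


relevant = ["a", "b", "c", "d", "e", "f", "g", "h"]                 # 8 relevant pages exist
first_page = ["a", "b", "c", "d"] + [f"junk{i}" for i in range(6)]  # 10 results, 4 relevant
print(precision_recall(first_page, relevant))  # (0.4, 0.5)

# A high-recall OBSERVE step: 100 items that include all 8 relevant pages.
everything = relevant + [f"junk{i}" for i in range(92)]
print(precision_recall(everything, relevant))  # (0.08, 1.0)
```

In these terms, the ORIENT step's job is to raise precision on the OBSERVE step's output without giving up much recall.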

The economist Herbert Simon articulates this well:

> The design principle that attention is scarce and must be preserved is very different from the principle of “the more information the better.” … The proper aim of a management information system is not to bring the manager all the information he needs, but to reorganize the manager’s environment of information so as to reduce the amount of time he must devote to receiving it.

LLMs are great at classifying and summarizing information streams. Product feedback is a good example: LLMs can generate a list of themes (e.g. "This software is slow"), pull the most powerful verbatim quotes describing each theme, count the themes across thousands of items, and graph them over time. The system can then write a digestible summary and send it to stakeholders at their preferred times.
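A minimal sketch of the counting half of that pipeline, assuming feedback arrives as `(date, text)` pairs. The `classify` function stands in for the LLM call that assigns a theme; here it is a hypothetical keyword rule table so the example runs offline, and "longest quote" is a crude placeholder for "most powerful quote". The output (counts per ISO week plus one verbatim quote per theme) is what one would chart and summarize for stakeholders.

```python
from collections import Counter, defaultdict
from datetime import date

# Hypothetical stand-in for an LLM classifier: in practice a model would be
# prompted to assign each piece of feedback to a theme (or propose new ones).
THEME_RULES = {
    "This software is slow": ("slow", "lag", "latency"),
    "Pricing is confusing": ("price", "pricing", "invoice"),
}


def classify(text):
    """Return the first theme whose keywords appear in `text`, else "Other".

    Substring matching is deliberately naive (e.g. "flag" contains "lag").
    """
    lowered = text.lower()
    for theme, words in THEME_RULES.items():
        if any(word in lowered for word in words):
            return theme
    return "Other"


def weekly_theme_counts(feedback):
    """Count themes per ISO week and keep one verbatim quote per theme.

    feedback: iterable of (datetime.date, str) pairs.
    Returns ({(iso_year, iso_week): Counter(theme -> count)}, {theme: quote}).
    """
    counts = defaultdict(Counter)
    quotes = {}
    for day, text in feedback:
        theme = classify(text)
        iso_year, iso_week, _ = day.isocalendar()
        counts[(iso_year, iso_week)][theme] += 1
        # Crude placeholder for "most powerful quote": keep the longest one.
        if len(text) > len(quotes.get(theme, "")):
            quotes[theme] = text
    return dict(counts), quotes


if __name__ == "__main__":
    counts, quotes = weekly_theme_counts([
        (date(2024, 5, 6), "The app is so slow on login"),
        (date(2024, 5, 7), "Pricing page is confusing"),
        (date(2024, 5, 14), "Huge lag when exporting"),
    ])
    for week in sorted(counts):
        print(week, dict(counts[week]))
    print(quotes)
```

Swapping `classify` for a real model call changes nothing else in the aggregation.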

"Smart" Map Reduce

We can imagine a "high-precision" LLM classifier running over every piece of information from every channel and aggregating the results into one or more reports for decision makers.
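One way to picture this "smart" map-reduce is sketched below, under stated assumptions: `Observation`, `smart_map_reduce`, and the 0.8 threshold are hypothetical, and `relevance` is any function returning a score in [0, 1], which in practice would wrap an LLM call. The map step scores every observation, a high threshold keeps only confident hits (favoring precision), and the reduce step groups survivors into one report per channel.

```python
from collections import defaultdict
from typing import Callable, Iterable, NamedTuple


class Observation(NamedTuple):
    channel: str  # e.g. "twitter", "arxiv", "support-tickets"
    text: str


def smart_map_reduce(
    observations: Iterable[Observation],
    relevance: Callable[[str], float],
    threshold: float = 0.8,
) -> str:
    """Score every observation, keep high scorers, and reduce them to one report."""
    # Map: score each observation (in practice, `relevance` wraps an LLM call).
    scored = ((obs, relevance(obs.text)) for obs in observations)
    # Filter: a high threshold trades recall for precision.
    kept = [(obs, score) for obs, score in scored if score >= threshold]
    # Reduce: group surviving observations by channel, highest score first.
    by_channel = defaultdict(list)
    for obs, score in kept:
        by_channel[obs.channel].append((score, obs.text))
    lines = []
    for channel in sorted(by_channel):
        lines.append(f"## {channel}")
        for score, text in sorted(by_channel[channel], reverse=True):
            lines.append(f"- ({score:.2f}) {text}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Toy scorer standing in for an LLM: "is this about a competitor?"
    def toy_relevance(text):
        return 1.0 if "competitor" in text.lower() else 0.1

    print(smart_map_reduce(
        [
            Observation("twitter", "Competitor X cut prices"),
            Observation("arxiv", "New benchmark released"),
            Observation("reddit", "Users compare us to a competitor"),
        ],
        toy_relevance,
    ))
```

Because each observation is scored independently, the map step parallelizes naturally across channels.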

Smart Notifications

Agents that listen

While many of the ORIENT capabilities, like writing web scrapers or notifications, could probably have been built before '23, they are simply much easier to build post-ChatGPT, and within the ORIENT category many of them are much more powerful unlocks.

Decisions presume a vision for who we are and why we are doing something. LLMs can help with decisions that are:

- Two-way doors
- One-way doors
- No doors (does this need to be a decision at all?) (tie to vision)

Some ways they can help:

1. War gaming
2. Debating
3. Decision narrowing / decision constraints (here are 3 options, pick one)
4. Contextual notification / reminder systems: a Bezos or Jobs in your pocket. Is this a one-way or two-way door? Better to act.
5. Random promotion of a decision arbiter within a small group
6. RAG on historical similarity

DECIDE marries the "what is" from the OBSERVE / ORIENT steps to the "what ought to be".

This is a Chief Strategy Officer (CSO), or a CEO, in one's pocket.

This is where ENCORE lives, and also where consulting lives.

1. COPILOT for X: turning 2-week tasks into 4-hour tasks

"Do the thing" services companies, building leverage through tools like harvey.ai: 10x cost reductions in many tasks that once cost a lot of money or time

Towards automatic deliverable creation (e.g. government contract pitches)

Data silos and regulatory constraints become a moat for strong AIs here; it is possible that we will see strong AIs hiring other strong AIs for things that the allocator cannot do efficiently.

Ground this in a specific example or two: 1. What product are we supposed to work on?

Open Questions:

1. Can companies tackle these components independently, or do they need to be tightly integrated?
2. Solve for the equilibrium. What is the consequence of all economic actors having access to strong-AI-powered decision augmenters?

Write this as a story

Reads: Gopal, Dschurm, DD, jxnl, ilan, roon?

Aside: an OODA-bot at the employee, team, division, and company level. A meta-OODA bot can ladder insights up from the individual all the way to the company. This extends to a world where most of the employees are agents.